Virtual Production

Greenscreen

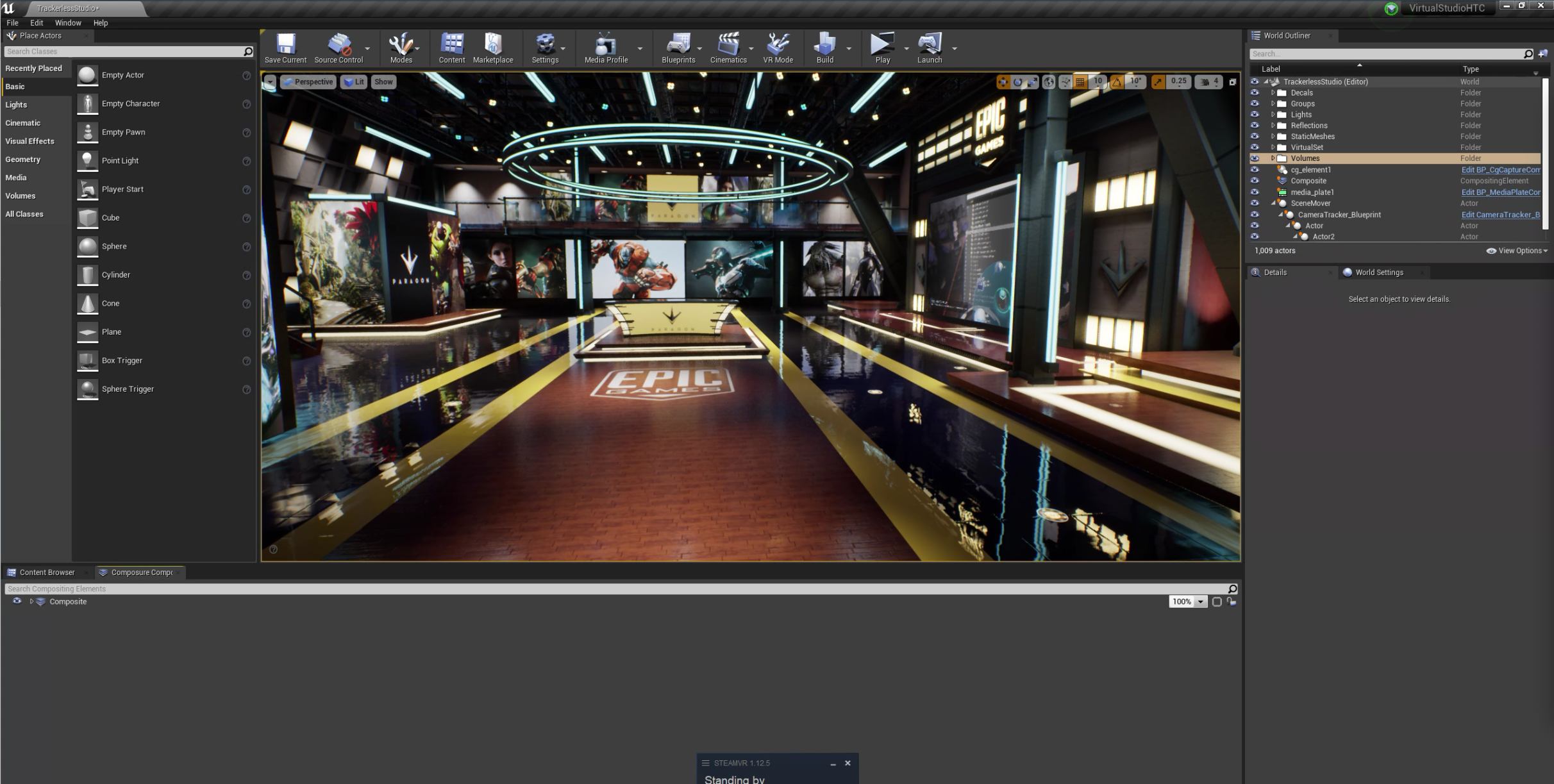

Real-Time Render

Using an HTC Vive, we track the “Real” camera in physical space in all six degrees of freedom (position and rotation). That position and rotation data is sent into Unreal Engine, which mirrors the movement on the “Virtual” camera. Every small movement you make is translated into a virtual space that renders in real time, thirty times a second.
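As a rough illustration of what "mirroring" a tracked position involves, here is a minimal sketch in Python. It assumes the tracker reports positions in meters in a right-handed, Y-up, -Z-forward space (the convention SteamVR tracking typically uses) and that the target is Unreal's left-handed, Z-up, X-forward space measured in centimeters. The names `Vec3`, `tracker_to_unreal_position`, and `frame_budget_ms` are hypothetical and are not part of the original setup, which is not described at this level of detail.

```python
from dataclasses import dataclass

# Assumption: Unreal's default world unit is the centimeter.
METERS_TO_UNREAL_UNITS = 100.0


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def tracker_to_unreal_position(p: Vec3) -> Vec3:
    """Convert an assumed tracker-space position (meters, Y-up, -Z forward)
    to an Unreal-style position (centimeters, Z-up, X forward)."""
    return Vec3(
        x=-p.z * METERS_TO_UNREAL_UNITS,  # tracker forward (-Z) -> Unreal forward (+X)
        y=p.x * METERS_TO_UNREAL_UNITS,   # tracker right (+X)   -> Unreal right (+Y)
        z=p.y * METERS_TO_UNREAL_UNITS,   # tracker up (+Y)      -> Unreal up (+Z)
    )


def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to track, render, and composite one frame."""
    return 1000.0 / frames_per_second


if __name__ == "__main__":
    # Camera 2 m in front of the origin, 0.5 m to the right, 1.5 m high.
    print(tracker_to_unreal_position(Vec3(0.5, 1.5, -2.0)))
    print(f"{frame_budget_ms(30):.1f} ms per frame at 30 fps")
```

At thirty frames a second, the whole chain has roughly 33 ms per frame, which is why the tracking data has to arrive with very little delay.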

At this point we do some good old-fashioned compositing, and your actor is now in a virtual production, in real time, with no post-processing required.
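The compositing step can be pictured with a very simple chroma key. The sketch below is an assumption for illustration only: it is a hard key based on green dominance, not the keyer used in this project, and the names `chroma_key_pixel`, `composite`, and the `threshold` value are hypothetical. Frames are plain nested lists of `(r, g, b)` tuples so the example stays self-contained.

```python
def chroma_key_pixel(foreground, background, threshold=40):
    """Return the background pixel where the foreground pixel is clearly green,
    otherwise keep the foreground pixel (a hard key, no edge softening)."""
    r, g, b = foreground
    green_dominance = g - max(r, b)
    return background if green_dominance > threshold else foreground


def composite(foreground_frame, background_frame, threshold=40):
    """Key every pixel of the camera frame over the rendered frame.
    Both frames are lists of rows of (r, g, b) tuples with the same size."""
    return [
        [chroma_key_pixel(fg, bg, threshold) for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground_frame, background_frame)
    ]


if __name__ == "__main__":
    camera = [[(20, 220, 30), (200, 150, 120)]]   # greenscreen pixel, skin-tone pixel
    render = [[(0, 0, 255), (0, 0, 255)]]         # virtual background
    print(composite(camera, render))
```

A production keyer would also soften edges and suppress green spill; this only shows the basic idea of replacing the green pixels with the real-time render.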

iPhone Tracking

This was the proof of concept that showed this was a usable solution. Using the accelerometer and camera built into the iPhone, we were able to track the camera in space and send that data into Unreal Engine. Issues arose because we were using Wi-Fi to send the signal, but this showed that we could do real-time tracking and compositing using the iPhone.
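The original does not say how the pose was sent over Wi-Fi, so the following is only a sketch of one common approach: packing each pose into a small fixed-size UDP datagram with a sequence number, so the receiver can discard packets that arrive late or out of order. The packet layout and the names `pack_pose`, `unpack_pose`, `send_pose`, and `is_newer` are hypothetical.

```python
import socket
import struct

# Assumed layout: uint32 sequence, float64 timestamp (seconds),
# 3 x float32 position, 3 x float32 rotation (pitch, yaw, roll in degrees).
POSE_FORMAT = "<Id6f"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # 36 bytes


def pack_pose(sequence, timestamp, position, rotation):
    """Serialize one camera pose into a fixed-size datagram payload."""
    return struct.pack(POSE_FORMAT, sequence, timestamp, *position, *rotation)


def unpack_pose(payload):
    """Inverse of pack_pose: returns (sequence, timestamp, position, rotation)."""
    values = struct.unpack(POSE_FORMAT, payload)
    return values[0], values[1], tuple(values[2:5]), tuple(values[5:8])


def is_newer(sequence, last_sequence):
    """Receiver-side check: ignore packets older than the last one applied."""
    return last_sequence is None or sequence > last_sequence


def send_pose(sock, address, payload):
    """Fire-and-forget send; UDP does not retransmit lost packets."""
    sock.sendto(payload, address)


if __name__ == "__main__":
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    packet = pack_pose(1, 12.5, (1.5, 0.25, -2.0), (0.0, 90.0, -45.5))
    # 127.0.0.1:9000 is a placeholder for the machine running the engine.
    send_pose(sock, ("127.0.0.1", 9000), packet)
    sock.close()
    print(unpack_pose(packet))
```

For a live camera, dropping a stale pose is usually preferable to waiting for a retransmission, which is the reasoning behind using a sequence number here rather than a reliable stream.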